Dongqi Pu

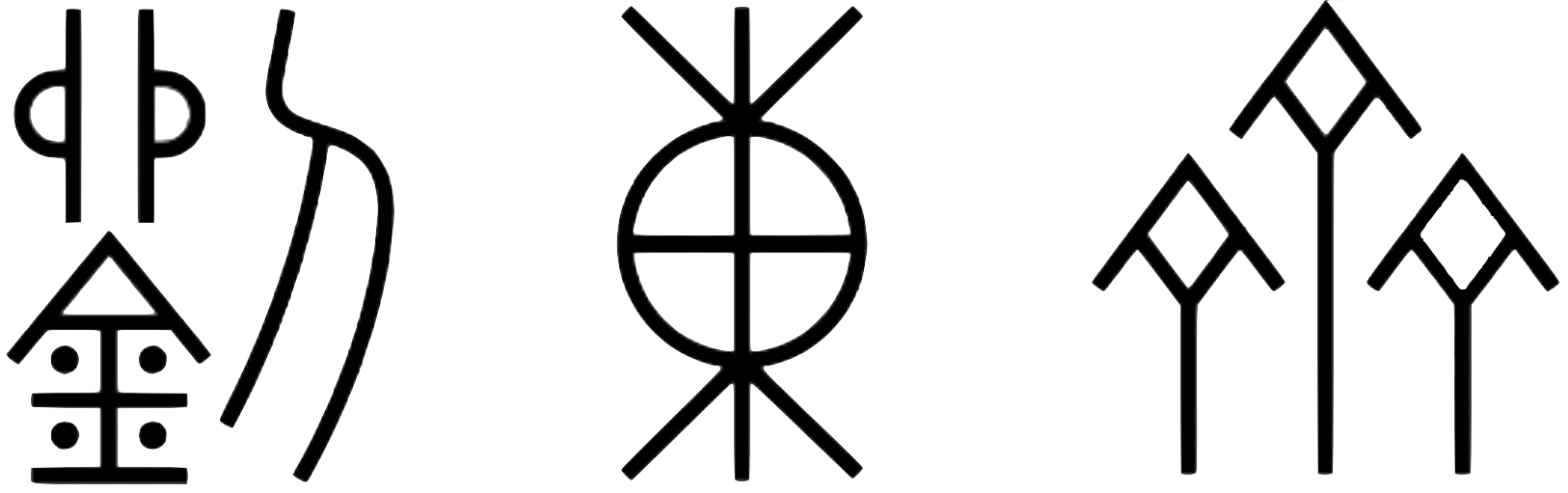

My name in Oracle bone script:

Doctoral researcher in Computational Linguistics

Campus C7.4, Saarland University, 66123, Germany

dongqi.me [AT] gmail.com

Incorporating Distributions of Discourse Structure for Long Document Abstractive Summarization

Abstract:

For text summarization, the role of discourse structure is pivotal in discerning the core content of a text. Regrettably, prior studies on incorporating Rhetorical Structure Theory (RST) into transformer-based summarization models only consider the nuclearity annotation, thereby overlooking the variety of discourse relation types. This paper introduces the 'RSTformer', a novel summarization model that comprehensively incorporates both the types and uncertainty of rhetorical relations. Our RST-attention mechanism, rooted in document-level rhetorical structure, is an extension of the recently devised Longformer framework. Through rigorous evaluation, the model proposed herein exhibits significant superiority over state-of-the-art models, as evidenced by its notable performance on several automatic metrics and human evaluation.

Code:

Code is available at: https://github.com/dongqi-me/RSTformer

Citation:

BibTeX:

@inproceedings{pu-etal-2023-incorporating,
    title = "Incorporating Distributions of Discourse Structure for Long Document Abstractive Summarization",
    author = "Pu, Dongqi and
      Wang, Yifan and
      Demberg, Vera",
    editor = "Rogers, Anna and
      Boyd-Graber, Jordan and
      Okazaki, Naoaki",
    booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",
    month = jul,
    year = "2023",
    address = "Toronto, Canada",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2023.acl-long.306",
    doi = "10.18653/v1/2023.acl-long.306",
    pages = "5574--5590"
}

ACL:

Dongqi Pu, Yifan Wang, and Vera Demberg. 2023. Incorporating Distributions of Discourse Structure for Long Document Abstractive Summarization. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 5574–5590, Toronto, Canada. Association for Computational Linguistics.